Ever since ChatGPT was first released nearly two years ago, students and teachers alike have been grappling with the question: is artificial intelligence (AI) a powerful tool that enhances education, or a crutch that cheats students out of deeper understanding?

Although AI has been heavily associated with academic dishonesty, some students have been considering AI’s potential as an ethical resource for learning. Senior Audrey Johnson finds AI useful for saving time when studying.

“I see students using AI primarily as a tool to make our studying more efficient,” Johnson said. “If you just want notes to review for a test later, you can save a lot of time by uploading a PDF of the textbook and having [an AI model] take notes for you.”

But while some students see AI as a time-saver, educators like computer science teacher Christina Wade worry that this convenience can tempt students to lean on these tools even more heavily, at the expense of their critical thinking skills.

“… there are times when student usage of [AI] is good, but a lot of times students … instead of grappling with [problems] for a little bit and having that productive struggle, they try a little bit, and if they don’t get it right away, they go to AI for answers,” Wade said.

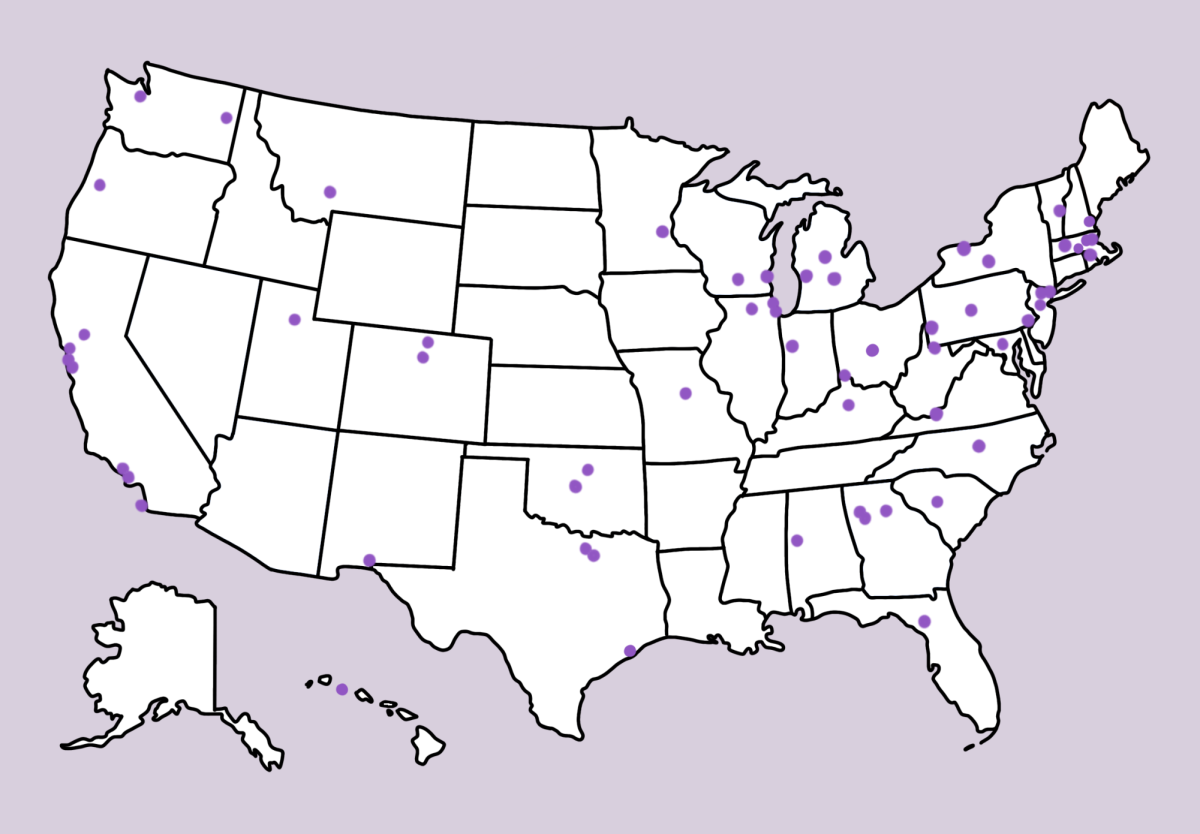

While teachers worry that AI may limit students’ ability to engage in “productive struggle,” some are exploring how the technology can be used to enhance learning. In fact, a survey conducted last spring by Burlingame’s AI Club found that 37% of staff respondents had used AI to generate content for their classes.

According to Dominic Bigue, the San Mateo Union High School District (SMUHSD) Instructional Technology Coordinator, the district has taken steps to educate teachers about the possible uses and impact of AI in classrooms.

“We hosted a professional development session for teachers last spring who were interested in learning about how AI could be used as a teaching assistant,” Bigue said. “Currently, we’re starting something called the AI Fellowship, which is for teachers to learn more about the impact of AI on teaching.”

On Sept. 12, OpenAI released the o1-preview and o1-mini AI models, the latest in a series of groundbreaking generative AI advancements. The o1 models have been trained to take more time before responding, in the hope that they will produce more refined answers. Recent Burlingame graduate and current College of San Mateo student David Rabinovich finds the new o1 models extremely useful, particularly in his math classes.

“For problems I would do, almost [never] would any AI get them right. This is the first time that I’ve ever seen AI be able to … do a really good job of helping me walk through how to do a problem,” Rabinovich said.

Given how effective these AI-generated explanations can be, it is natural to wonder if this technology can be used to tutor students in need of help. Senior Ryan Wang believes that despite the advancements being made, AI lacks the humanity to be an effective tutor.

“Every tutee is different, but AI models are all the same. You have to really have this humanity aspect of it — you have to have a heart [and] empathy,” Wang said.

Furthermore, Bigue points out that responses from AI models have been known to reflect the biases present in their training data.

“What AI will do sometimes is that when you ask it, for instance, to identify images of doctors, what it returns or can return is a list of doctors or images that follow stereotypes,” Bigue said.

Wade believes that the conversation shouldn't focus on resistance but on adaptation.

“I think that there’s a perception that teachers are against AI and that we don’t want students using it, and I don’t think that’s the case. We just want students to use it responsibly and to actually learn stuff,” Wade said. “… [we] can’t stop this moving train. It’s coming … so we just have to learn to adapt.”